Back in September last year I chose to back the StarFive VisionFive 2 on Kickstarter. I don't have a particular use in mind for it, but I felt it was one of the first RISC-V systems that were relatively capable (mentally I have it as somewhere between a Raspberry Pi 3 and a Pi 4). In particular, it's a quad-core 1.5GHz 64-bit RISC-V system with 8GB of RAM, USB3, GigE Ethernet and a single M.2 PCIe slot. More than ample as a personal machine for playing around with RISC-V and doing local builds. I ended up paying 67 for the Early Bird variant (dual GigE Ethernet rather than 1 x 100Mb and 1 x GigE). A couple of weeks ago I got an email with a tracking number, and last week it finally turned up.

Being impatient, the first thing I did was plug it into a monitor, connect up a keyboard, and power it on. Nothing, except some flashing lights. Looking at the boot selector DIP switches suggested it was configured to boot from UART, so I flipped them to (what I thought was) the flash setting. It wasn't; it turns out the ON marking on the switches represents logic 0, and it had been set up correctly when I got it. I went to read the documentation, which talked about writing an image to a MicroSD card but also had details of the UART connection. Wanting to make sure the device was at least doing something before I actually tried an OS on it, I hooked up a USB/serial dongle and powered the board up again. Success!

U-Boot appeared and I could interact with it.

I went to the VisionFive2 Debian page and proceeded to torrent the Image-69 image, writing it to a MicroSD card and inserting it in the slot on the bottom of the board. It booted fine. I can't even tell you what graphical environment it booted up, because I don't remember; it worked fine though (at 1080p; I've seen reports that 4K screens will make it croak).

Poking around the image revealed that it's built off a snapshot.debian.org snapshot from 20220616T194833Z, which is a little dated at this point, but I understand the rationale behind picking something that works and sticking with it. The kernel is of course a vendor special, based on 5.15.0. Further investigation revealed that the entire X/graphics stack is living in `/usr/local`, which isn't overly surprising; it's Imagination-based. I was pleasantly surprised to discover there is work to upstream the Imagination support, but I'm not planning to run the board with a monitor attached, so it's not a high priority for me.

Having discovered all that, I decided to see how well a clean Debian unstable install from Debian Ports would go. I had a spare Intel Optane lying around (it's a stupid 22110 M.2, which is too long for any machine I own), so I put it in the slot on the bottom of the board. To my surprise it Just Worked and was detected OK:

```
# lspci
0000:00:00.0 PCI bridge: PLDA XpressRich-AXI Ref Design (rev 02)
0000:01:00.0 USB controller: VIA Technologies, Inc. VL805/806 xHCI USB 3.0 Controller (rev 01)
0001:00:00.0 PCI bridge: PLDA XpressRich-AXI Ref Design (rev 02)
0001:01:00.0 Non-Volatile memory controller: Intel Corporation NVMe Datacenter SSD [Optane]
```

I created a single partition with an ext4 filesystem (I initially tried btrfs, but the StarFive kernel doesn't support it), and kicked off a debootstrap with:

```
# mkfs -t ext4 /dev/nvme0n1p1
# mount /dev/nvme0n1p1 /mnt
# debootstrap --keyring=/etc/apt/trusted.gpg.d/debian-ports-archive-2023.gpg \
    unstable /mnt https://deb.debian.org/debian-ports
```

The U-Boot setup has a convoluted set of vendor scripts that eventually end up reading a `/boot/extlinux/extlinux.conf` config from `/dev/mmcblk1p2`, so I added an additional entry there using the StarFive kernel but pointing to the NVMe device for `/`. I made sure to set a root password (not that I've been bitten by that before, too many times), and rebooted. Success! Well. Sort of.

I hit a bunch of problems with having a getty running on `ttyS0` as well as one running on `hvc0`. The second turns out to be a console device from the RISC-V SBI. A `systemctl mask serial-getty@hvc0.service` made things a bit happier, but I was still seeing odd behaviour and output. It turned out I needed to rebuild the initramfs as well; the StarFive one was using Plymouth and doing some other stuff that seemed to be confusing matters. An `update-initramfs -k 5.15.0-starfive -c` built me a new one and everything was happy.
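For anyone wanting to do the same, a minimal sketch of such an additional entry might look like the following. The label, menu text, file paths and `fdtdir` location are hypothetical placeholders rather than the contents of the StarFive image; the idea is to copy the existing StarFive entry and change only the `root=` argument, so the kernel and initramfs are still loaded from the MicroSD card while `/` lives on the NVMe partition.

```
# Hypothetical extra entry for /boot/extlinux/extlinux.conf on /dev/mmcblk1p2.
# Label, file paths and fdtdir are placeholders: copy them from the existing
# StarFive entry and change only root= to point at the NVMe partition.
label debian-nvme
    menu label Debian unstable (StarFive 5.15 kernel, root on NVMe)
    linux /boot/vmlinuz-5.15.0-starfive
    initrd /boot/initrd.img-5.15.0-starfive
    fdtdir /boot/dtbs/
    append root=/dev/nvme0n1p1 rw rootwait console=ttyS0,115200
```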

Next problem: the StarFive kernel doesn't have IPv6 support. StarFive are good citizens and make their 5.15 kernel tree available, so I grabbed it, fed it the existing config, and tweaked some options (including adding IPV6 and SECCOMP, which chrony wanted). There was a slight hiccup when it turned out that trying to do things like making sound modular caused it to fail to compile, and I had to backport the fix that allowed the use of GCC 12 (as present in sid), but it got there. So I got cocky and tried to update it to the latest 5.15.94. A few manual merge fixups later (which I may or may not have got right, but it compiles and boots for me), success. Timings:

```
$ time make -j 4 bindeb-pkg
[linux-image-5.15.94-00787-g1fbe8ac32aa8]
real    37m0.134s
user    117m27.392s
sys     6m49.804s
```
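The config tweaks described above could be captured as a small Kconfig fragment along these lines. `CONFIG_IPV6` and `CONFIG_SECCOMP` correspond to the options named in the text; `CONFIG_SECCOMP_FILTER` is an assumption on my part, included because syscall-filtering daemons generally rely on seccomp filter mode rather than plain seccomp. One way to apply such a fragment is to merge it into the existing `.config` (for instance with the kernel's `scripts/kconfig/merge_config.sh`) and then run `make olddefconfig` to resolve any dependencies.

```
# Hypothetical fragment layered on top of the StarFive 5.15 config.
CONFIG_IPV6=y
CONFIG_SECCOMP=y
# Assumption: filter mode, which syscall filters such as chrony's use.
CONFIG_SECCOMP_FILTER=y
```

As for the timings, user plus sys time divided by wall-clock time gives a rough measure of how busy the four cores were kept: (117m27.392s + 6m49.804s) / 37m0.134s = 7457.2s / 2220.1s ≈ 3.36, or roughly 84% of the 4 cores.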

On the subject of kernels, I am pleased to note that there are efforts to upstream the VisionFive 2 support, with what appear to be multiple members of StarFive engaging in multiple rounds of patch submission. It's really great to see this, and I look forward to being able to run an unmodified mainline kernel on my board.

Niggles? I have a few. The provided U-Boot doesn't have NVMe support enabled, so at present I need to keep a MicroSD card to boot off, even though root is on an SSD. I'm also seeing some errors in dmesg from the SSD:

```
[155933.434038] nvme nvme0: I/O 436 QID 4 timeout, completion polled
[156173.351166] nvme nvme0: I/O 48 QID 3 timeout, completion polled
[156346.228993] nvme nvme0: I/O 108 QID 3 timeout, completion polled
```

It doesn't seem to cause any actual issues, and it could be the SSD, the 5.15 kernel or an actual hardware thing; I'll keep an eye on it (I will probably end up with a different SSD that actually fits, so that'll provide another data point).
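One way to keep an eye on it is to count these messages periodically. The following is a minimal sketch; the helper name is hypothetical, and the pattern simply matches the message format in the log excerpt above.

```sh
#!/bin/sh
# count_nvme_timeouts (hypothetical helper): read kernel log lines on stdin and
# print how many are NVMe "timeout, completion polled" messages.
count_nvme_timeouts() {
    grep -c 'nvme nvme[0-9]*: I/O [0-9]* QID [0-9]* timeout, completion polled'
}

# Example: dmesg | count_nvme_timeouts
```

Note that `grep -c` prints 0 but exits non-zero when nothing matches, which matters if the helper is called from a script running under `set -e`.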

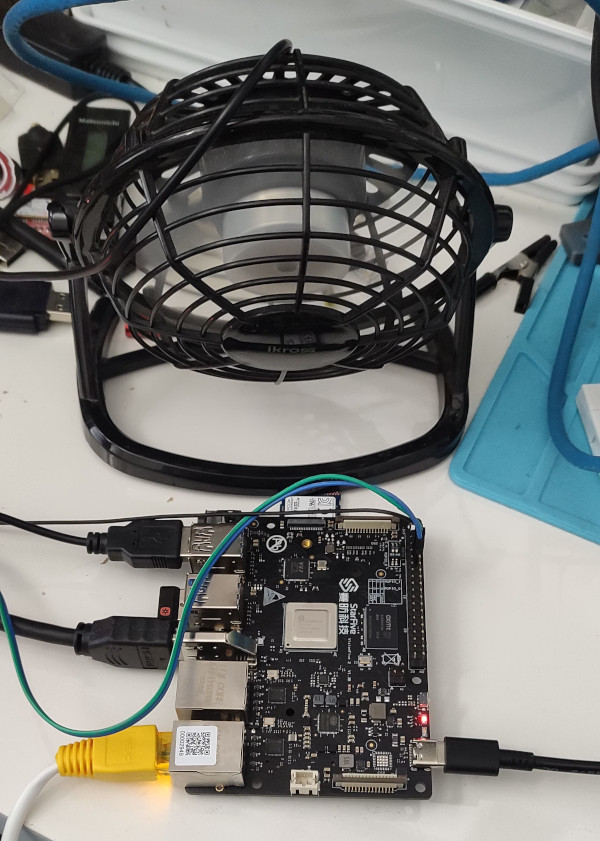

More annoying is the temperature the CPU seems to run at. There's no heatsink or fan, just the metal heatspreader on top of the CPU, and in normal idle operation it sits at around 60°C. Compiling a kernel, it hit 90°C before I stopped the job and sorted out some additional cooling in the form of a desk fan, which kept it at just over 30°C.

I haven't seen any actual stability problems, but I wouldn't want to run it for any length of time like that. I've ordered a heatsink and also realised that the board supports a Raspberry Pi style PoE HAT, so I've ordered one of those that includes a fan (I am a complete convert to PoE, especially for small systems like this).
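Temperatures like these can be read from the Linux thermal sysfs interface, which reports values in millidegrees Celsius. Below is a minimal sketch of a conversion helper. The function name is hypothetical, and which `thermal_zone` corresponds to the CPU on this board is an assumption that should be checked against each zone's `type` file.

```sh
#!/bin/sh
# millideg_to_c (hypothetical helper): convert a thermal sysfs reading, which is
# in millidegrees Celsius, into degrees Celsius with one decimal place.
millideg_to_c() {
    awk -v m="$1" 'BEGIN { printf "%.1f\n", m / 1000 }'
}

# Example usage on the board. thermal_zone0 being the CPU is an assumption;
# check /sys/class/thermal/thermal_zone*/type first.
# millideg_to_c "$(cat /sys/class/thermal/thermal_zone0/temp)"
```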

With the desk fan setup I've been able to run the board for extended periods under load (I did a full recompile of the Debian 6.1.12-1 kernel package and it took about 10 hours). The M.2 slot is unfortunately only a single PCIe v2 lane, and my testing topped out at about 180MB/s. IIRC that is about half what the slot should be capable of, and less than a tenth of what the SSD can do. Ethernet testing with iPerf3 sustained about 941Mb/s, so basically maxing out the port. The board as a whole isn't going to set any speed records, but it's perfectly usable, and pretty impressive for the price point.
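For reference, here is how those two numbers compare with the theoretical limits. A PCIe 2.0 lane signals at 5 GT/s with 8b/10b encoding, which gives the following raw per-direction ceiling before protocol overhead. In practice, that overhead pulls the achievable figure somewhat lower.

$$
5\ \text{GT/s} \times \frac{8}{10} = 4\ \text{Gb/s} = 500\ \text{MB/s}, \qquad \frac{180\ \text{MB/s}}{500\ \text{MB/s}} = 0.36
$$

For the Ethernet figure, the calculation assumes IPv4, a 1500-byte MTU and TCP timestamps, which are on by default in Linux. Each full frame carries 1448 bytes of TCP payload but occupies 1538 bytes on the wire once the Ethernet header, FCS, preamble and inter-frame gap are included:

$$
1000\ \text{Mb/s} \times \frac{1500 - 20 - 20 - 12}{1500 + 14 + 4 + 8 + 12} = 1000\ \text{Mb/s} \times \frac{1448}{1538} \approx 941.5\ \text{Mb/s}
$$

That is why roughly 941Mb/s is effectively line rate for TCP over GigE.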

On the Debian side I've not hit any surprises. There's work going on to make RISC-V a proper release architecture, and I'm hoping to be able to help out with that, but the version of unstable I installed from the ports infrastructure has looked just like any other Debian install. Which is what you want. And that pretty much sums up my overall experience of the VisionFive 2; it's not noticeably different from any other single board computer. That's a good thing, FWIW, and once the kernel support lands properly upstream (it'll be post 6.3 at least, it seems) it'll be a boring mainline-supported platform that just happens to be RISC-V.

It was brought to my attention recently that the mobile viewing experience of this blog was not exactly what I'd hope for. In my poor defence, I proofread on my desktop, and the only time I see my posts on mobile is via FreshRSS. Also, my UX ability sucks.

Anyway. I've updated the theme to a more recent version of minima and tried to make sure I haven't broken it all in the process (I did break tagging, but then I fixed it again). I double-checked the generated feed to confirm it was the same (other than some re-tagging I did), so hopefully I haven't flooded anyone's feed.

Hopefully I can go back to ignoring the underlying blog engine for another 5+ years. If not, I'll have to take a closer look at Enrico's staticsite.



So, it's this weird time of year when we take stock and share with the world some ideas about the future. And yes, it's time to take care of this blog, as its activity has dropped once again. So maybe it'd be nice to start this post by checking how much I have blogged over the years:


This has ended up longer than I expected. I'll write up posts about some of the individual steps in more detail at some point, but this is an overview of the yak shaving I engaged in. The TL;DR is:


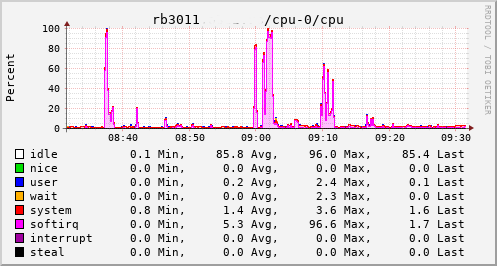

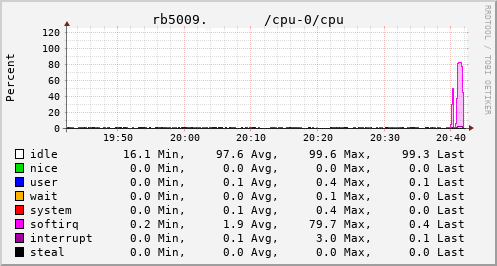

This provided an opportunity to see just what the RB3011 could actually manage. In the configuration I had, it turned out to be not much more than the 80Mb/s speeds I had previously seen. The upload jumped from a solid 20Mb/s to 75Mb/s, so I knew the regrade had actually happened. Looking at CPU utilisation clearly showed the problem: softirqs were using almost 100% of a CPU core.

Now, the way the hardware is set up on the RB3011 is that there are two separate 5-port switches, each connected back to the CPU via a separate GigE interface. For various reasons I had everything on a single switch, which meant that all traffic was boomeranging in and out of the same CPU interface. The IPQ8064 has dual cores, so I thought I'd try moving the external connection to the other switch. That puts it on its own GigE CPU interface, which then allows binding its interrupts to a different CPU core. That helps; throughput to the outside world hits 140Mb/s+. Still a long way from the expected max, but proof we just need more grunt.
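On Linux, you bind an interrupt to a particular core by writing a hexadecimal CPU bitmask to `/proc/irq/<N>/smp_affinity`, where bit n selects CPU n. Below is a minimal sketch of building that mask. The helper name and the IRQ number in the usage comment are hypothetical; the real number for each GigE interface can be found in `/proc/interrupts`. This isn't necessarily how the RB3011 was configured.

```sh
#!/bin/sh
# cpus_to_mask (hypothetical helper): turn a list of CPU numbers into the hex
# bitmask format used by /proc/irq/<N>/smp_affinity (bit n = CPU n).
cpus_to_mask() {
    local mask=0 cpu
    for cpu in "$@"; do
        mask=$(( mask | (1 << cpu) ))
    done
    printf '%x\n' "$mask"
}

# Hypothetical usage as root: pin IRQ 45 to the second core (CPU 1).
# cpus_to_mask 1 > /proc/irq/45/smp_affinity
```

Alternatively, `/proc/irq/<N>/smp_affinity_list` accepts a plain CPU list such as `1`. If `irqbalance` is running, it may move the interrupt again.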


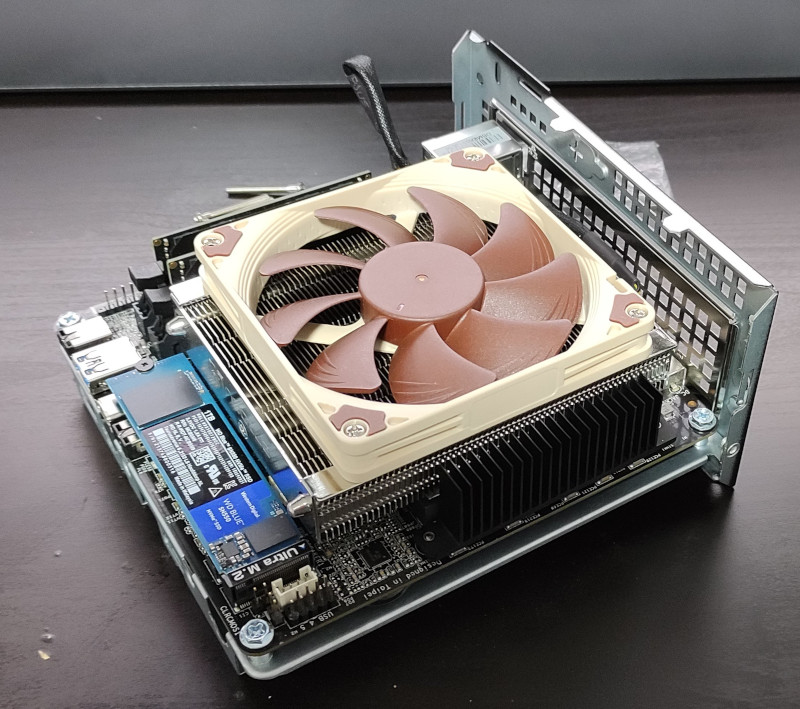

Which brings us to this past weekend when, having worked out all the other bits, I tried the squashfs root image again on the RB3011. Success! The home automation bits connected to it, the link to the outside world came up, and everything seemed happy. So I double-checked my bootloader bits on the RB5009, brought it down to the comms room and plugged it in instead. And, modulo my failing to update the nftables config to allow it to do forwarding, it all came up OK. Some testing with iperf3 internally got a nice 912Mb/s sustained between subnets, and some less scientific testing with wget + speedtest-cli saw speeds of over 460Mb/s to the outside world.
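For reference, a Linux router needs two things before it will forward traffic. First, IP forwarding must be enabled in the kernel (the `net.ipv4.ip_forward` sysctl, plus `net.ipv6.conf.all.forwarding` for IPv6). Second, any firewall forward chain must permit the traffic. Below is a minimal sketch of the second part in nftables syntax. The interface name is a hypothetical placeholder, and a real ruleset would be more specific about what may cross between subnets and out to the WAN.

```
# Hypothetical /etc/nftables.conf excerpt; "lan" is a placeholder interface name.
table inet filter {
    chain forward {
        type filter hook forward priority 0; policy drop;
        # Allow return traffic for flows that were already permitted.
        ct state established,related accept
        # Allow anything arriving from the internal side to be routed onwards.
        iifname "lan" accept
    }
}
```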

Time from ordering the router until it was in service? Just under 8 weeks.


It's been over 20 months since the first COVID lockdown kicked in here in Northern Ireland and I started working from home. Even when the strict lockdown was lifted, the advice here has continued to be "If you can work from home, you should work from home". I've been into the office here and there (for new starts, given you need to hand over a laptop and sort out some login details, it's generally easier to do so in person, and I've had a couple of whiteboard sessions that needed high-bandwidth face-to-face communication), but day to day is all from home.


My
